Achieving fairness in medical devices, Volume: 372, Issue: 6537, Pages: 30-31, DOI: (10.1126/science.abe9195)

When we think about racism, we often imagine people, systems, and institutions. But what we can fail to acknowledge is that technology—medical devices, algorithms, and machines—can also be racist. Technological advancements have often been hailed for their promise of objectivity and impartiality, offering a glimmer of hope in combating systemic biases. Yet technology is not immune to racism, nor is it immune to bias. On the contrary, history has repeatedly shown that even machines designed to be objective can inadvertently perpetuate discrimination and disparities.

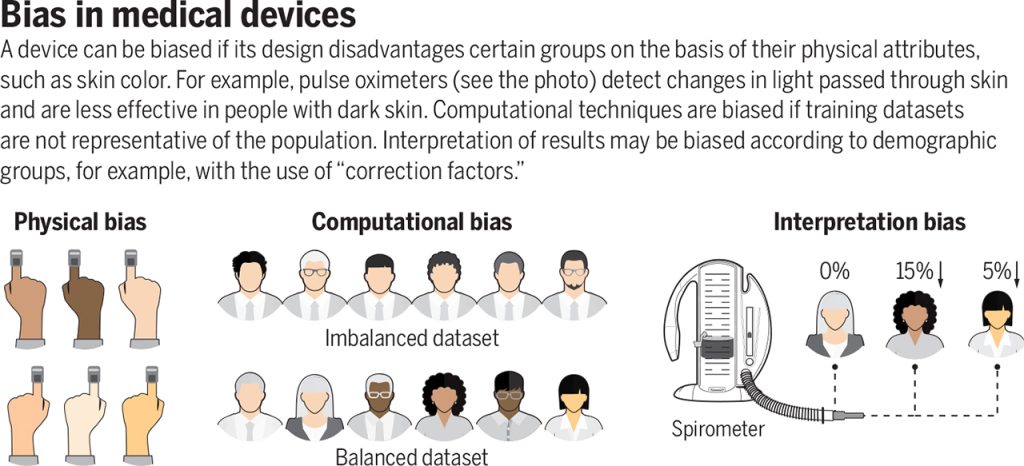

Racism in technology is not a distant concept for me. In the spring of 2021, I took part in the MIT linQ Catalyst Healthcare Innovation Fellowship, a unique program that brings together experts from various fields to collaboratively identify and validate unmet medical and health-related needs, explore new project opportunities, and develop action plans. Tasked with identifying an unmet need, I embarked on a search for a project and eventually stumbled upon a striking case study of “systemic racism in miniature”: pulse oximeters. Pulse oximeters are ubiquitous in medicine. Thousands of times a day, healthcare workers use them to measure the percentage of oxygen in the blood and make vital treatment decisions for conditions such as asthma, COPD, and now COVID-19. These devices were presumed to be unbiased tools, functioning uniformly across different racial and ethnic groups. Yet both my own research and that of others revealed a disturbing reality: pulse oximeters were less accurate in patients with darker skin tones, a deficiency that can lead, and already has led, to potential misdiagnoses, delayed treatments, and compromised patient care.

This jarring revelation hits home even more when we recognize that oxygen is vital for brain health. The presence of racial bias in a tool meant to measure such a crucial substance for the brain’s well-being underscores just how easily systemic racism embedded in technology can go on to compromise the brain health of an entire community.

This ultimately poses a pressing question: What is the role of technology in health and society? Is technology a tool meant to help us progress or a weapon that will be used to oppress? The answer, I believe, hinges on how we wield it.

Today, the field of medicine, particularly neurology and mental health, is in the midst of a renaissance, with an unprecedented wave of technological innovation. The story of the pulse oximeter serves as a cautionary reminder that the technologies we develop now will invariably shape the brain health of tomorrow. Hence, it is imperative that we remain vigilant in addressing and rectifying the systemic racism present in our technologies to ensure a future of equity and inclusion in brain health. Only then can we harness the true potential of technology as a tool for progress rather than a weapon for oppression.

Regrettably, the pulse oximeter case is just one example of systemic racism infiltrating technology. While technological innovations are relatively recent, there is already a troubling body of evidence revealing hidden biases within medical technology.

Bias in Algorithms: Algorithms that were intended to serve as impartial judges of health can end up perpetuating systemic racism and exacerbating inequalities. For example, an algorithm that used health costs as a proxy for health needs wrongly concluded that Black patients were healthier than white patients, because it replicated patterns of health care access shaped by poverty rather than measuring actual need. Similarly, diagnostic algorithms and practice guidelines that adjust or “correct” their outputs on the basis of a patient’s race or ethnicity have been shown to guide medical decision-making in ways that may direct more attention or resources to white patients than to members of racial and ethnic minorities.

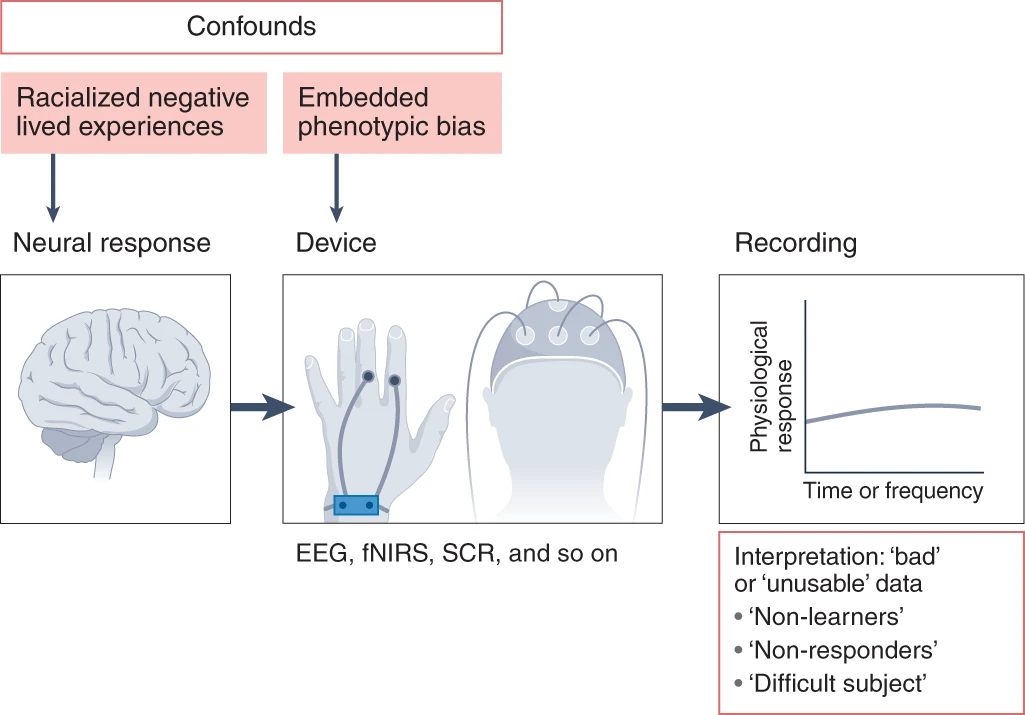

Fig. 3: The potential sources of racial bias in psychophysiological data collection.

Both effects of racialized negative life experiences on neural responses and embedded phenotypic bias (against darker skin and/or coarse, curly hair) in devices may influence recorded data. Historically, these confounds have not been considered, leading to the exclusion of Black participants from analyses and mislabeling participants as ‘non-learners’, ‘non-responders’ or ‘difficult subjects’. (Reference: Webb EK, Etter JA, Kwasa JA. Addressing racial and phenotypic bias in human neuroscience methods. Nature Neuroscience. 2022;25(4):410-414.)

This cascade of failures raises a crucial question: Who should be held accountable when technology fails? The commonly held belief that technology, due to its scientific and objective nature, is immune from human biases and errors needs to be debunked. Technologies are not developed in isolation; they are products of human design, shaped by underlying identities, values, and objectives. Likewise, the social implications of technological tools reflect the larger system within which technology operates.

It stands to reason, then, that if a technology is racist, it is because it is operating in a racist society. As Deborah Raji, a Mozilla Fellow in Trustworthy AI, eloquently states:

Therefore, accountability for technology failures lies not solely with the technology itself but also with the technology designers and the broader system they operate within. Only by acknowledging this shared responsibility can we confront and rectify the systemic racism present in medical technology and strive toward a future of equitable and inclusive healthcare.

How, exactly, can we ensure that we are creating inclusive technology that does not suffer from the fatal pitfalls of systemic racism? Equity-centered design. Equity-centered design emerges as the guiding light in the tumultuous sea of technological innovation. Understanding the complexities of diverse backgrounds, beliefs, and practices is the moral compass that could steer technology away from automating racism.

To accomplish this, we can implement the following strategies:

By implementing these strategies, we don the armor necessary for this epic battle. Only through cultural competency can technology be molded into an instrument of progress. Embracing equity-centered design allows us to navigate the challenges posed by systemic racism, paving the way for a future where technology becomes a catalyst for positive change, fostering inclusivity and equal opportunities for all.

Many things about the future are uncertain, but when it comes to technology, at least this much is known: it will continue to have a prominent role. But will its role be as a tool or as a weapon? The answer to that question depends in part on perspective: who wields power and how they do it. The future is not predetermined; it is created. By taking race(ism) out of the equation through equity-centered design, we can ensure that future technology is an ally, not a foe, on the battleground for equity in the fields of neurology and mental health. By leveraging inclusive technology as an antiracist tool for brain health, we can dismantle the barriers that systemic racism erects and pave the way for a future where every individual’s well-being is prioritized and respected. Only then can we truly race against the machine to build a world where technology is a force for progress and equality.

The Boston Congress of Public Health Thought Leadership for Public Health Fellowship (BCPH Fellowship) seeks to:

It is guided by an overall vision to provide a platform, training, and support network for the next generation of public health thought leaders and public scholars to explore and grow their voice.